Lab Streaming Layer (LSL) is an open-source middleware ecosystem designed to stream, receive, synchronize, and record neural, physiological, and behavioral data across diverse sensor hardware. By bridging LSL with SightLab VR, researchers can seamlessly incorporate third-party EEG, eye-tracking, and physiological data into their virtual reality experiments, as well as export VR-derived metrics to external acquisition software.


LSL provides a unified interface for real-time data transmission between devices and applications.

For a complete list of supported devices and applications, visit the Lab Streaming Layer device list.
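Synchronization in LSL rests on timestamps. Each sample is stamped with the sender's `local_clock()`, and `StreamInlet.time_correction()` estimates the offset, in seconds, to add to those stamps so they are expressed on the receiving machine's clock. The sketch below is a minimal, pylsl-free illustration of using corrected timestamps to find the sample nearest a VR event. The helper names `to_local_clock` and `nearest_sample_index` are hypothetical, and the 0.5 s offset is an invented example value standing in for what `time_correction()` would return.

```python
from bisect import bisect_left


def to_local_clock(timestamps, offset):
    """Shift remote LSL timestamps onto the receiver's clock.

    offset: seconds, e.g. the value returned by StreamInlet.time_correction().
    """
    return [t + offset for t in timestamps]


def nearest_sample_index(timestamps, event_time):
    """Return the index of the timestamp closest to event_time.

    Assumes timestamps are sorted in ascending order.
    """
    if not timestamps:
        raise ValueError("no timestamps to search")
    i = bisect_left(timestamps, event_time)  # first stamp >= event_time
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    before, after = timestamps[i - 1], timestamps[i]
    return i - 1 if event_time - before <= after - event_time else i


# Hypothetical EEG stamps from a machine whose clock reads 0.5 s behind ours
remote = [10.0, 10.004, 10.008, 10.012]
local = to_local_clock(remote, 0.5)
print(nearest_sample_index(local, 10.507))
```

The same lookup works for any pair of LSL streams once both are on a common clock, which is what lets VR events be lined up against physiological samples after the fact.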

Before integrating LSL with your SightLab project, install the `pylsl` Python bindings. In the Vizard IDE, use Tools → Package Manager → Add Package, or install from the command line:

```
pip install pylsl
```
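Once `pylsl` is installed, it can help to confirm which streams are visible on the network before wiring them into SightLab. The sketch below assumes a recent `pylsl` release, in which `resolve_streams(wait_time=...)` returns a list of `StreamInfo` objects. The helpers `describe_stream` and `list_streams` are hypothetical, and `pylsl` is imported inside `list_streams` so the file can be loaded even where the library is absent.

```python
def describe_stream(info):
    """Format the key fields of a pylsl StreamInfo (or any object with the same methods)."""
    rate = info.nominal_srate()
    rate_text = "irregular" if rate == 0 else f"{rate:g} Hz"  # 0.0 means irregular rate
    return (f"{info.name()} | type={info.type()} | "
            f"channels={info.channel_count()} | rate={rate_text} | "
            f"source_id={info.source_id()}")


def list_streams(wait_time=2.0):
    """Print every LSL stream found on the network within wait_time seconds."""
    from pylsl import resolve_streams  # imported here so this file loads without pylsl

    streams = resolve_streams(wait_time=wait_time)
    if not streams:
        print("No LSL streams found.")
    for info in streams:
        print(describe_stream(info))
    return streams
```

Calling `list_streams()` while a source such as a Muse or BrainVision stream is running should print one line per stream; an EEG stream needs to report `type=EEG` to be found by a `'type', 'EEG'` query.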

Then, in your script’s imports:

```python
from pylsl import StreamInfo, StreamInlet, StreamOutlet, resolve_byprop

import sightlab_utils.sightlab as sl
from sightlab_utils.settings import *
```

To ingest external biosignals (e.g., EEG from Muse or BrainVision) directly into your VR session:

Discover the Stream

```python
# Wait up to 5 seconds for a stream whose type is 'EEG'
streams = resolve_byprop('type', 'EEG', timeout=5.0)
if not streams:
    raise RuntimeError('No EEG stream found on the network')

inlet = StreamInlet(streams[0])
# 'sightlab' is the SightLab instance created earlier in your script
sightlab.addStreamInlet('EEG', inlet)
```

See ExampleScripts/LSL_Sample/lsl_sample_receive.py for a complete implementation, including averaging and experiment-summary integration.
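The sample script's averaging code is not reproduced here. As a rough, independent sketch of the idea, the class below keeps a per-channel mean over a sliding window of samples drawn from `StreamInlet.pull_chunk(timeout=0.0)`, which returns a `(samples, timestamps)` pair without blocking. The class name `ChannelAverager` and the 256-sample default window are hypothetical choices, not taken from `lsl_sample_receive.py`.

```python
from collections import deque


class ChannelAverager:
    """Sliding-window mean of each channel in an LSL stream (hypothetical helper)."""

    def __init__(self, n_channels, window=256):
        self.n_channels = n_channels
        self.buffer = deque(maxlen=window)  # oldest samples drop out automatically

    def update(self, inlet):
        """Drain whatever is waiting on the inlet and return the current means."""
        samples, _timestamps = inlet.pull_chunk(timeout=0.0)
        for sample in samples:
            self.buffer.append(sample[: self.n_channels])
        return self.means()

    def means(self):
        """Per-channel mean over the buffered samples, or None if nothing arrived yet."""
        if not self.buffer:
            return None
        count = len(self.buffer)
        return [sum(s[ch] for s in self.buffer) / count
                for ch in range(self.n_channels)]
```

With the inlet created above, `update(inlet)` could be called once per frame, for example from a Vizard `vizact.onupdate` callback; a non-blocking pull keeps the render loop from stalling when no new EEG data is waiting.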

Export VR-derived metrics, such as gaze coordinates, head orientation, or pupil diameter, to external analysis tools:

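Create an LSL Outlet

```python
from pylsl import StreamInfo, StreamOutlet

# Name, type, channel count (x, y, z), nominal rate (0.0 = irregular), format, source ID
info = StreamInfo('SightLabGaze', 'Gaze', 3, 0.0, 'float32', 'sightlab123')
outlet = StreamOutlet(info)

# 'sightlab' is the SightLab instance created earlier in your script
sightlab.addStreamOutlet('Gaze', outlet)

# Push one [x, y, z] sample per update; gaze_pos comes from your gaze data
outlet.push_sample(gaze_pos)
```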

See ExampleScripts/LSL_Sample/lsl_sample_stream.py for generic outlet setup.
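Because the outlet above is declared with three `float32` channels and a nominal rate of `0.0` (irregular), each `push_sample` call should pass exactly three numbers, and pylsl stamps the sample with the current `local_clock()` unless an explicit timestamp is given. A minimal guard against malformed samples might look like the sketch below; `push_gaze` is a hypothetical helper, and how `gaze_pos` is obtained in SightLab is left to your script.

```python
import math


def push_gaze(outlet, gaze_pos, timestamp=0.0):
    """Validate a 3-D gaze sample and push it to an LSL outlet.

    Returns True if the sample was pushed, False if it was skipped.
    timestamp=0.0 lets pylsl stamp the sample with the current local_clock().
    """
    try:
        sample = [float(v) for v in gaze_pos]
    except (TypeError, ValueError):
        return False  # gaze_pos was None or contained non-numeric values
    if len(sample) != 3 or not all(math.isfinite(v) for v in sample):
        return False  # wrong channel count, or NaN/inf (e.g. tracking lost)
    outlet.push_sample(sample, timestamp)
    return True
```

Skipping invalid frames, rather than pushing placeholder values, keeps the irregular-rate stream free of samples that downstream tools might mistake for real gaze data.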

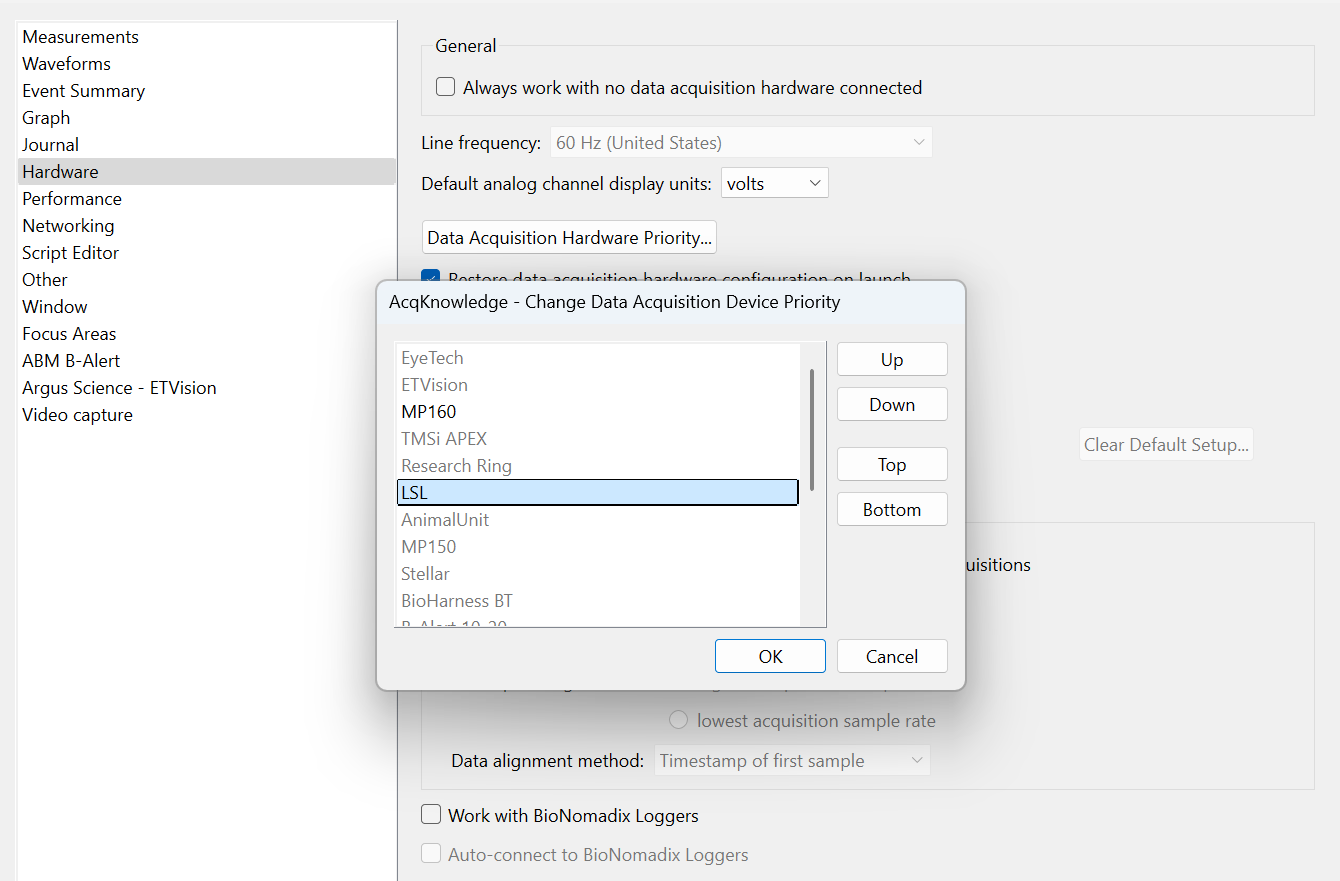

Biopac AcqKnowledge now natively supports LSL, enabling VR-derived streams to be captured alongside physiological channels.

By using LSL within SightLab, researchers can both import and export synchronized biosignal and behavioral data. Whether streaming EEG into SightLab for closed-loop VR paradigms or pushing gaze and kinematic metrics to Biopac AcqKnowledge, the integration supports complex multimodal experiments in which every stream shares LSL timestamps.