Multi-user VR experiments represent one of the most powerful directions in behavioral science, training, and immersive analytics. SightLab VR Pro makes this accessible by providing a robust multi-user architecture combined with deep data collection capabilities.

With server-client synchronization, customizable avatars, and integrations across eye tracking, physiological sensors, and replay systems, researchers can now study human interaction in shared virtual spaces with unprecedented realism.

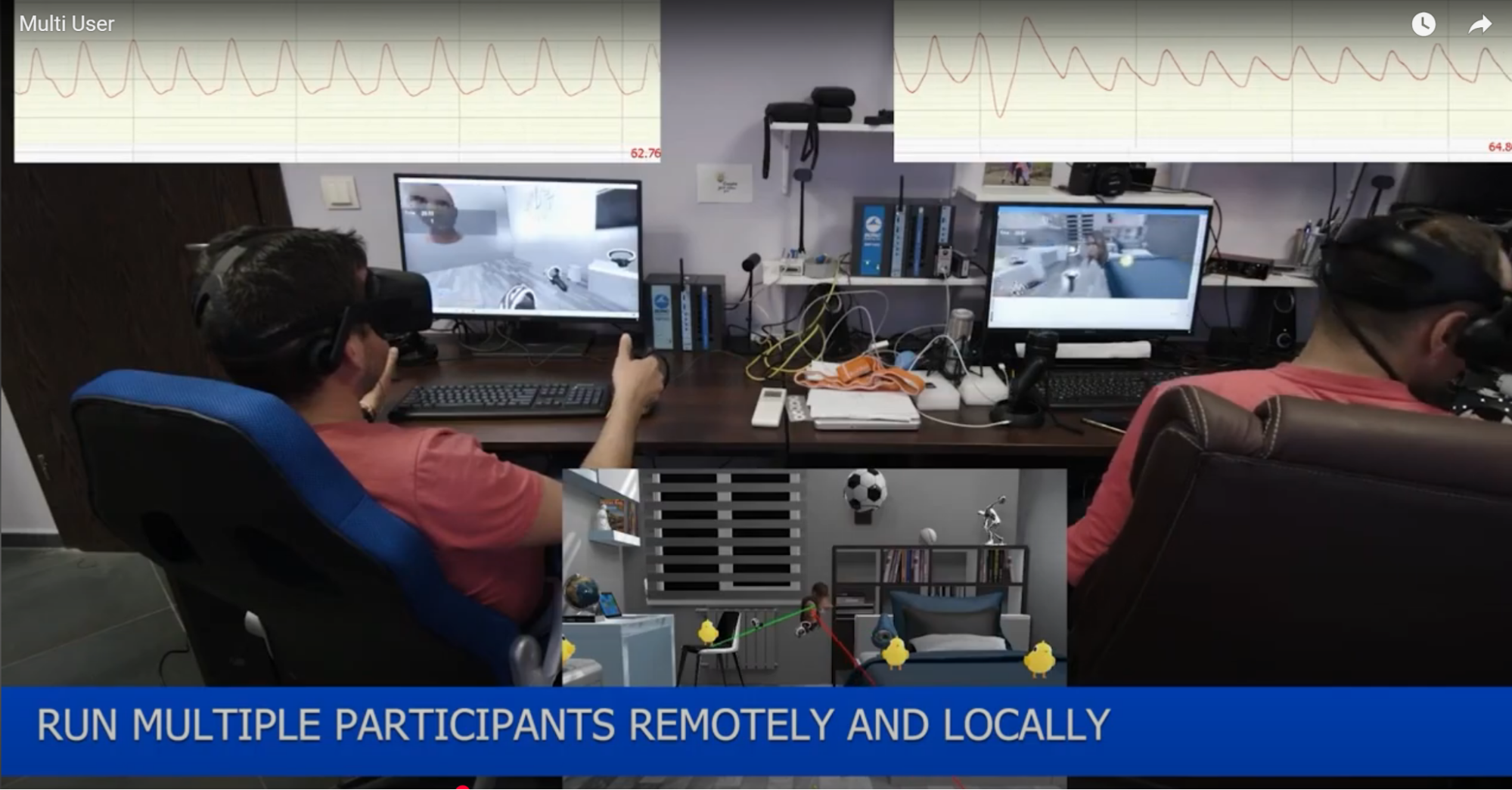

SightLab supports both server and client roles. A session is launched by running the SightLab VR Server, and participants join via the SightLab VR Client. Each client can use an eye-tracked or non-eye-tracked headset, and once connected, avatars are assigned (with names, tags, or color coding). Both the server and clients can view others in real time, while the server has a bird’s-eye view for monitoring and recording. Clients can be located either locally or remotely.

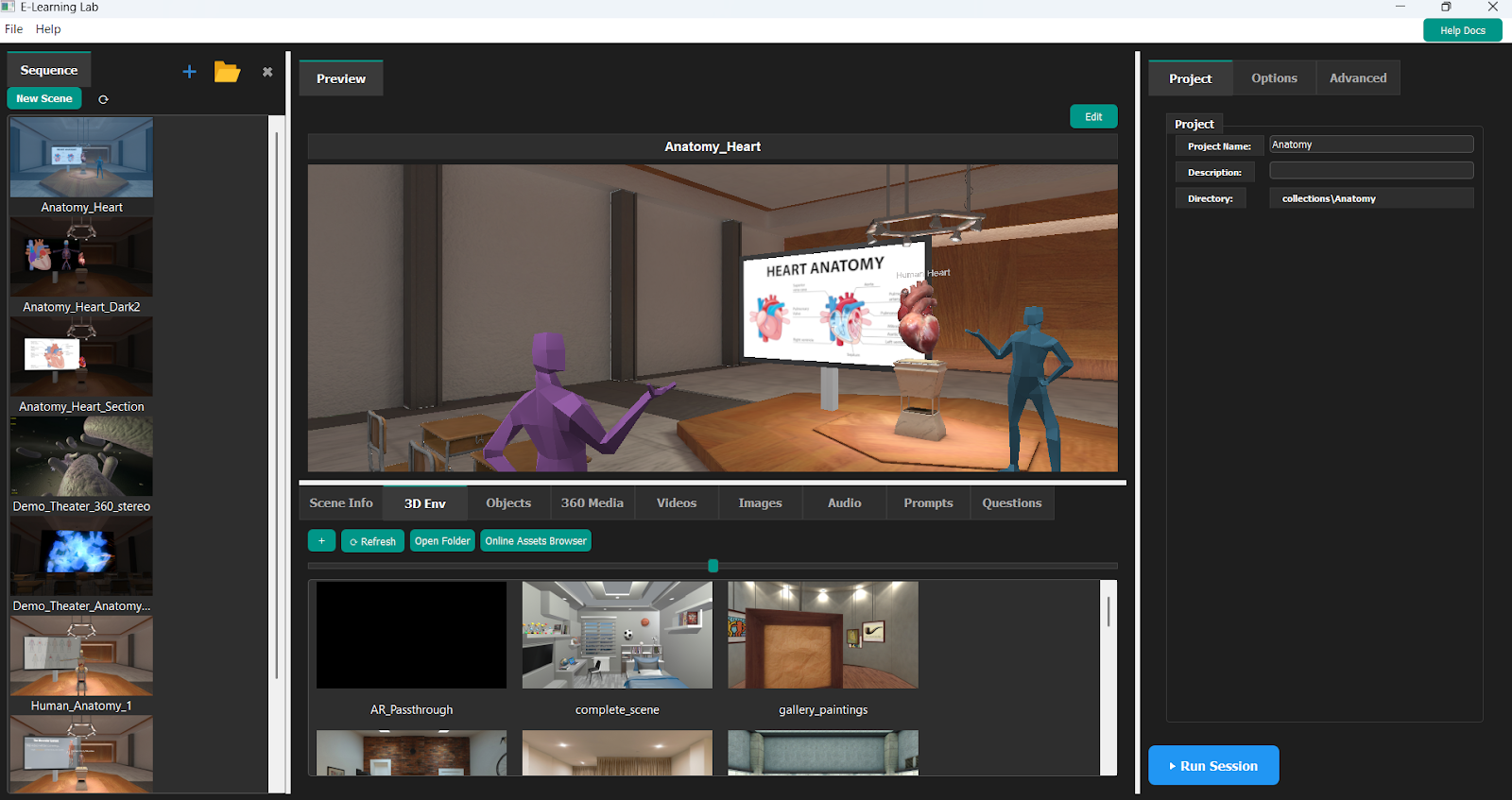

Beyond simple co-presence, SightLab includes Presentation Mode, where an instructor or facilitator can control trial flow for all participants inside VR. This makes it suitable not just for research, but also for education, therapy, and collaborative training.

Eye tracking is fully integrated into multi-user sessions. Once recording begins, SightLab logs fixation counts, dwell times, saccade metrics, gaze paths, and pupil measures, all synchronized across participants. Even in non-eye-tracked headsets, head position is used for comparable analytics.
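To make the dwell-time idea concrete, here is a minimal sketch of how per-participant view counts and dwell times could be derived from synchronized gaze samples. The `GazeSample` record and the `dwell_summary` function are hypothetical names for illustration, not SightLab's log format or API. The sketch also treats any run of consecutive samples on the same object as one "view", which is simpler than a real fixation detector.

```python
from collections import defaultdict
from typing import NamedTuple, Optional


class GazeSample(NamedTuple):
    """One hypothetical gaze sample (an assumption, not SightLab's log format)."""
    participant: str
    t: float                 # seconds since session start, shared clock assumed
    target: Optional[str]    # object hit by the gaze ray, or None


def dwell_summary(samples):
    """Return {participant: {target: (view_count, total_dwell_seconds)}}.

    A "view" is a run of consecutive samples on the same target. Its duration
    is measured from the first to the last sample of that run.
    """
    by_participant = defaultdict(list)
    for s in samples:
        by_participant[s.participant].append(s)

    summary = {}
    for pid, rows in by_participant.items():
        rows.sort(key=lambda s: s.t)
        stats = defaultdict(lambda: [0, 0.0])  # target -> [views, dwell]
        run_target, run_start, run_end = None, 0.0, 0.0
        for s in rows:
            if s.target != run_target:
                # Close the previous run before starting a new one.
                if run_target is not None:
                    stats[run_target][0] += 1
                    stats[run_target][1] += run_end - run_start
                run_target, run_start = s.target, s.t
            run_end = s.t
        if run_target is not None:  # close the final run
            stats[run_target][0] += 1
            stats[run_target][1] += run_end - run_start
        summary[pid] = {k: (c, round(d, 6)) for k, (c, d) in stats.items()}
    return summary
```

Because every sample carries a participant identifier, the same pass yields per-client results that can be compared or pooled afterwards.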

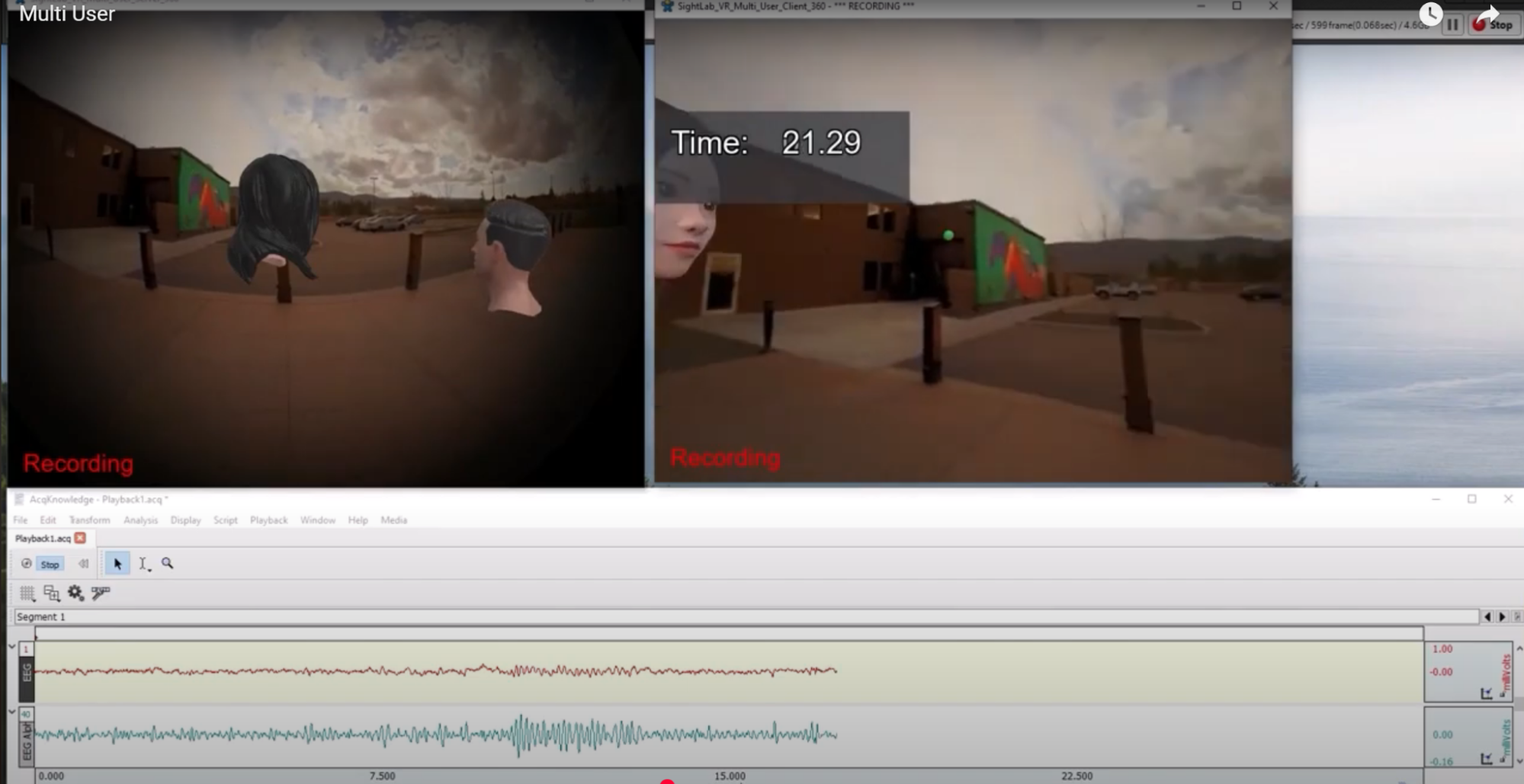

Researchers can later replay sessions with heatmaps, scan paths, dwell distributions, and per-client or aggregated views.
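As a rough illustration of the difference between per-client and aggregated views, the sketch below bins gaze points into a coarse grid for each client and then sums the grids. It assumes gaze points have already been projected to normalized `(u, v)` coordinates in the range 0 to 1. The function names and data layout are hypothetical and do not reflect how SightLab renders its heatmaps.

```python
def gaze_heatmap(points, bins=4):
    """Bin normalized (u, v) gaze points into a bins x bins grid of counts."""
    grid = [[0] * bins for _ in range(bins)]
    for u, v in points:
        # Clamp so that values on or beyond the edges land in a border cell.
        col = max(0, min(int(u * bins), bins - 1))
        row = max(0, min(int(v * bins), bins - 1))
        grid[row][col] += 1
    return grid


def aggregate(grids):
    """Element-wise sum of equally sized per-client grids."""
    return [[sum(cells) for cells in zip(*rows)] for rows in zip(*grids)]


# Hypothetical per-client gaze points for illustration only.
per_client_points = {
    "client_A": [(0.1, 0.1), (0.9, 0.9)],
    "client_B": [(0.1, 0.1)],
}
per_client_grids = {
    name: gaze_heatmap(pts, bins=2) for name, pts in per_client_points.items()
}
combined = aggregate(list(per_client_grids.values()))
```

Keeping the per-client grids separate until the final step means the same data supports both individual and group-level views.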

Multi-user setups can be combined with BIOPAC AcqKnowledge. Events from VR are synchronized with physiological recordings (heart rate, EDA, respiration), enabling researchers to correlate interaction patterns with autonomic arousal.
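One way to picture this correlation step is the sketch below. It looks up the physiological sample closest to a VR event and averages the signal over a window after the event. It assumes both streams are already expressed on a common clock with sorted timestamps. The function names and the plain-list data layout are hypothetical, not the AcqKnowledge or SightLab format.

```python
from bisect import bisect_left


def nearest_sample_index(sample_times, event_time):
    """Index of the sample whose timestamp is closest to event_time.

    sample_times must be sorted in ascending order and non-empty.
    """
    i = bisect_left(sample_times, event_time)
    if i == 0:
        return 0
    if i == len(sample_times):
        return len(sample_times) - 1
    before, after = sample_times[i - 1], sample_times[i]
    return i if after - event_time < event_time - before else i - 1


def mean_in_window(sample_times, values, event_time, window_s):
    """Mean of values with event_time <= t < event_time + window_s, or None."""
    lo = bisect_left(sample_times, event_time)
    hi = bisect_left(sample_times, event_time + window_s)
    if lo == hi:
        return None  # no samples fall inside the window
    return sum(values[lo:hi]) / (hi - lo)
```

For example, calling `mean_in_window` with the timestamp of a hypothetical "object grabbed" event and a window of a few seconds gives a simple post-event summary that can be compared across participants.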

Additionally, SightLab supports Lab Streaming Layer (LSL), opening the door to simultaneous integration of third-party neuro and bio-sensors across participants.

Every multi-user session is saved as a replay file. This replay system makes it possible to revisit complex social dynamics frame by frame.

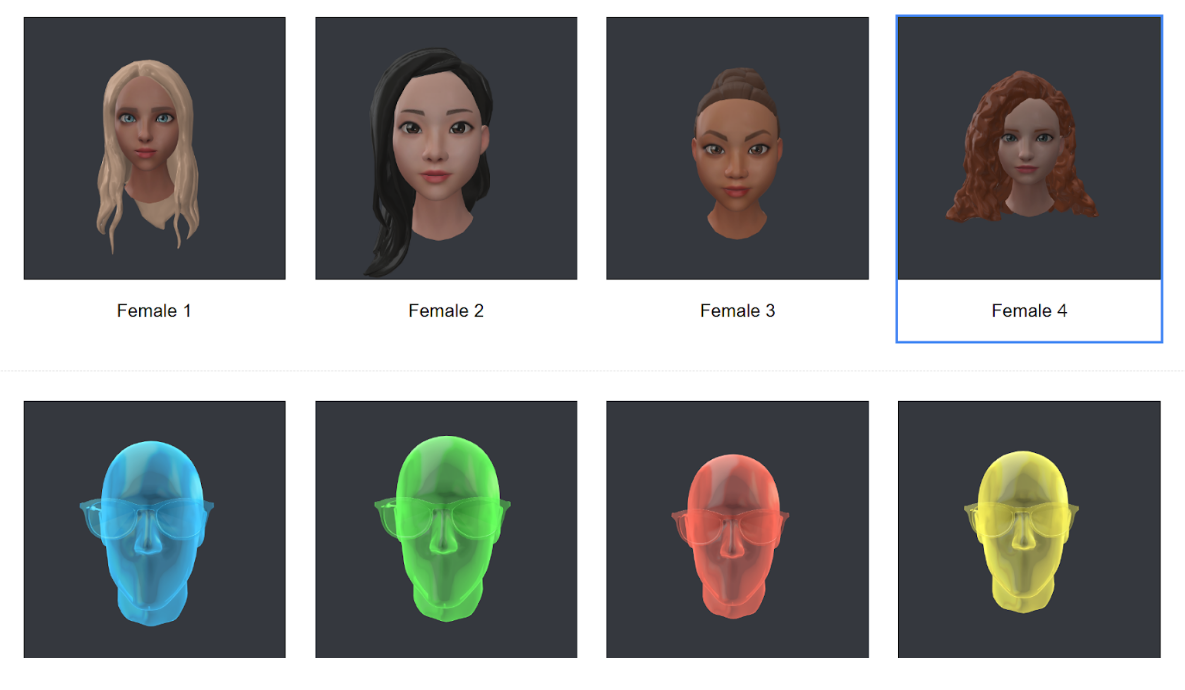

SightLab provides avatars with synchronized tracking of hands, feet, and head, as well as full body tracking (as of SightLab 2.6). Avatars can be customized (via ReadyPlayerMe, Rocketbox, Reallusion, etc.) and enhanced with AI Agents that interact conversationally in real time. Avatars can carry identifiers, speech, and even transcript logging.

Multi-user sessions are not limited to pure VR. SightLab supports passthrough AR for headsets like Meta Quest Pro/Quest 3 and Varjo. This allows mixed-reality studies where real and virtual participants interact seamlessly.

Shared experiences can include synchronized 360° videos, 3D model environments, or virtual screens for presenting media across clients. These setups allow group studies of media perception, cooperative problem solving, or joint training.

Through the External Data Recorder and custom APIs, researchers can log interactions with outside software, enabling hybrid workflows (VR plus desktop or mobile). Real-world objects can also be integrated into shared VR contexts.

To make getting started even easier, SightLab comes with an extensive library of example scripts, demos, and templates that showcase multi-user functionality. Researchers can use these as-is for pilot studies or adapt them as templates for custom multi-participant experiments. The included examples not only accelerate development but also demonstrate best practices for synchronizing interaction, logging data, and analyzing replay across multiple users.

These templates demonstrate how to implement synchronized gaze tracking, physiology, and replay in multi-user settings, and they serve as flexible starting points for customized, domain-specific experiments.

Multi-user experiments aren’t limited to headsets. With Projection VR, you can combine head-mounted displays with large-scale 3D or 2D projected backdrops. This creates hybrid environments where some participants are fully immersed in VR, while others view and interact with the scene through projection. Additionally, WorldViz’s PRISM system enables touch-based interactive projection experiences, adding another layer of engagement. These setups are ideal for classrooms, group demonstrations, or research labs where accessibility and scalability are key.

Setting up a multi-user VR research lab can be complex, but WorldViz provides end-to-end support. From choosing the right combination of VR headsets, projection systems, and tracking hardware to configuring the software for synchronized data capture and replay, our team can help design and install turnkey multi-user environments. We also offer consulting to tailor experiments to your specific research goals, whether in psychology, neuroscience, training, or collaborative learning.

Multi-participant SightLab experiments combine co-presence, behavioral data, physiology, and replay into a unified platform. Whether the goal is studying gaze coordination, synchrony of physiological arousal, group problem solving, or social interaction with AI avatars, SightLab delivers the tools for a new era of realism and data capture.

Ready to explore multi-user VR research?

Request a demo or contact us at sales@worldviz.com.