The integration of BIOPAC’s AcqKnowledge software with SightLab VR Pro and Vizard gives researchers a new way to measure the mind–body connection inside immersive virtual environments. Because physiological data and VR behavioral data are synchronized on a common timeline, scientists, educators, and clinicians can observe how people think, feel, and respond to their surroundings in real time.

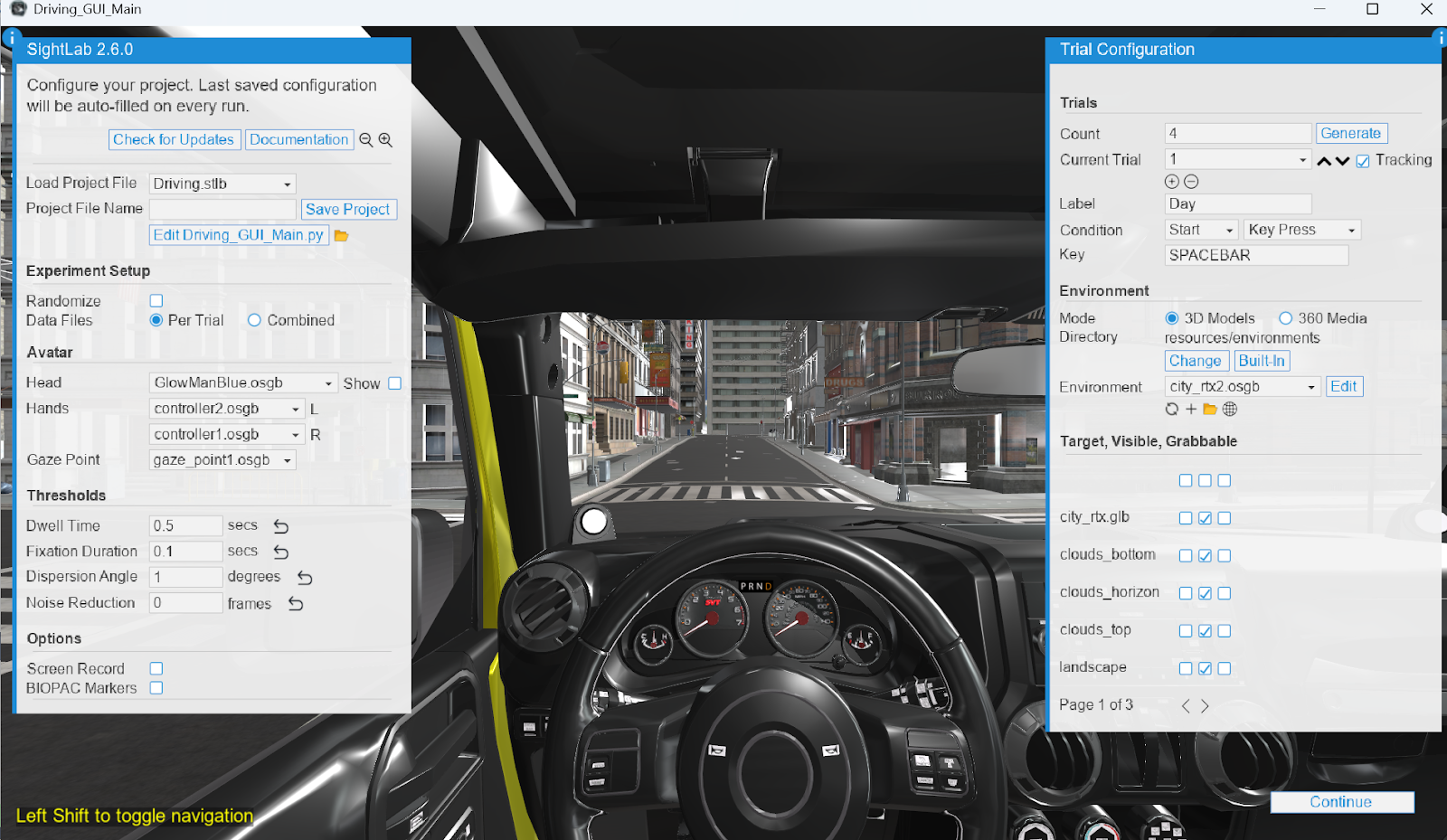

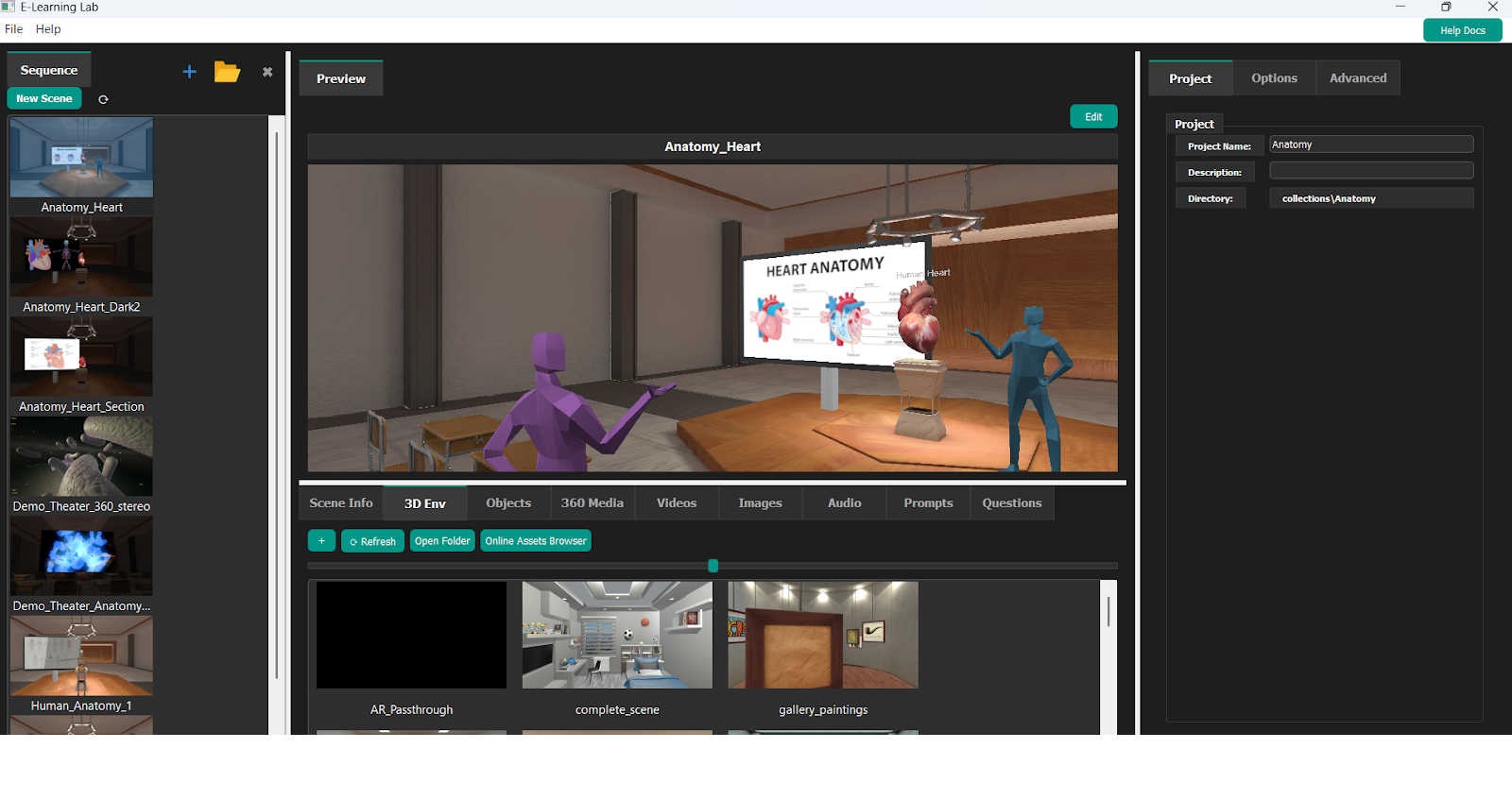

SightLab VR, from WorldViz, is an all-in-one platform for designing and running virtual reality experiments. Built on the Vizard platform, it includes gaze tracking, object interaction, and advanced replay analytics. Connected to BIOPAC, it becomes a complete psychophysiological lab in VR.

Through a single setup, you can track and synchronize:

Together, these create a holistic record of behavior and physiology — what the participant saw, how they reacted, and what their body was doing in the moment.

Setting up BIOPAC integration in SightLab takes only a few steps. Enable Network Data Transfer (NDT) in AcqKnowledge, then either check the “Send to BIOPAC” option in the SightLab GUI or, for code-based experiments, add a single line of code: `sl.SightLab(BIOPAC=True)`.

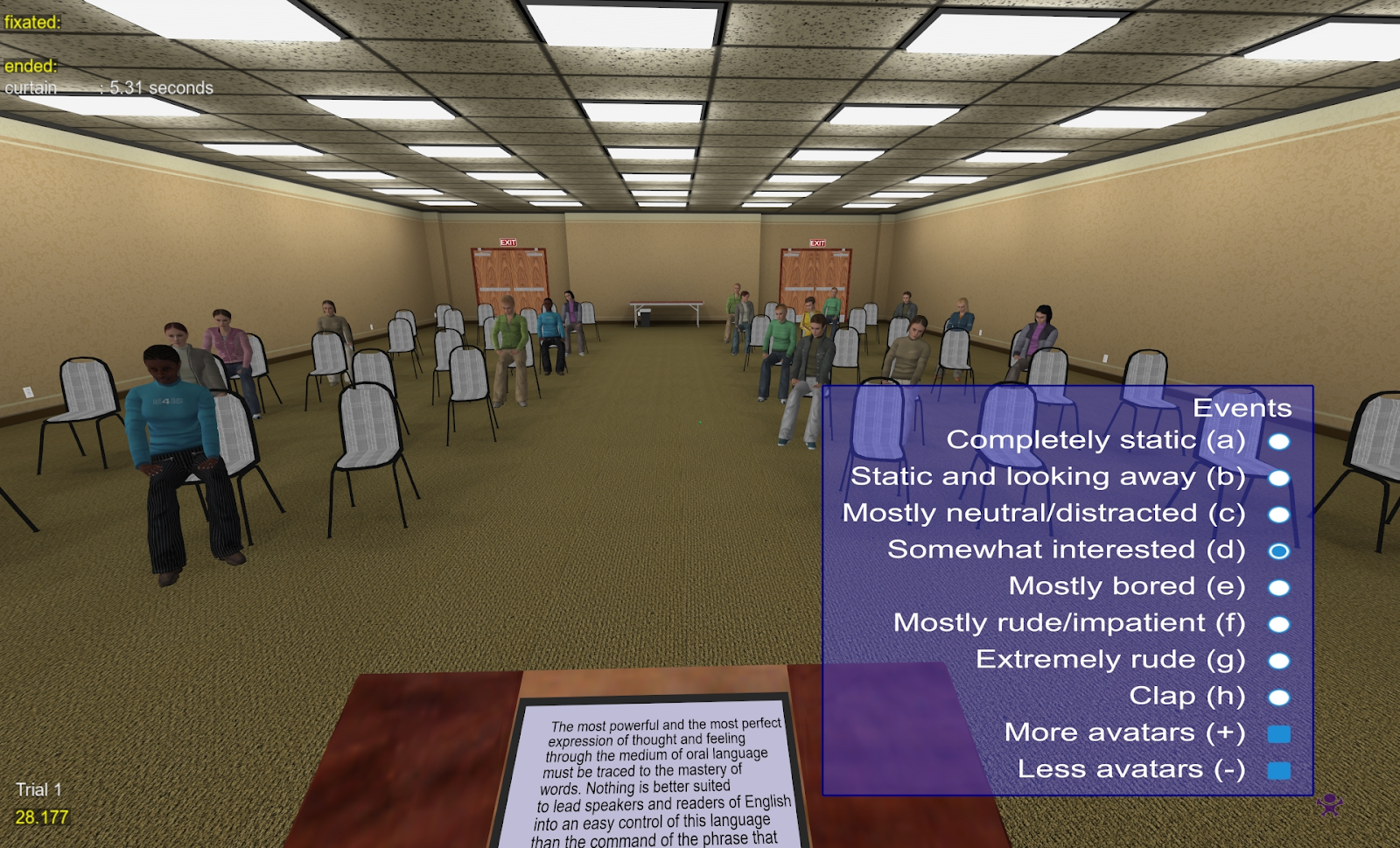

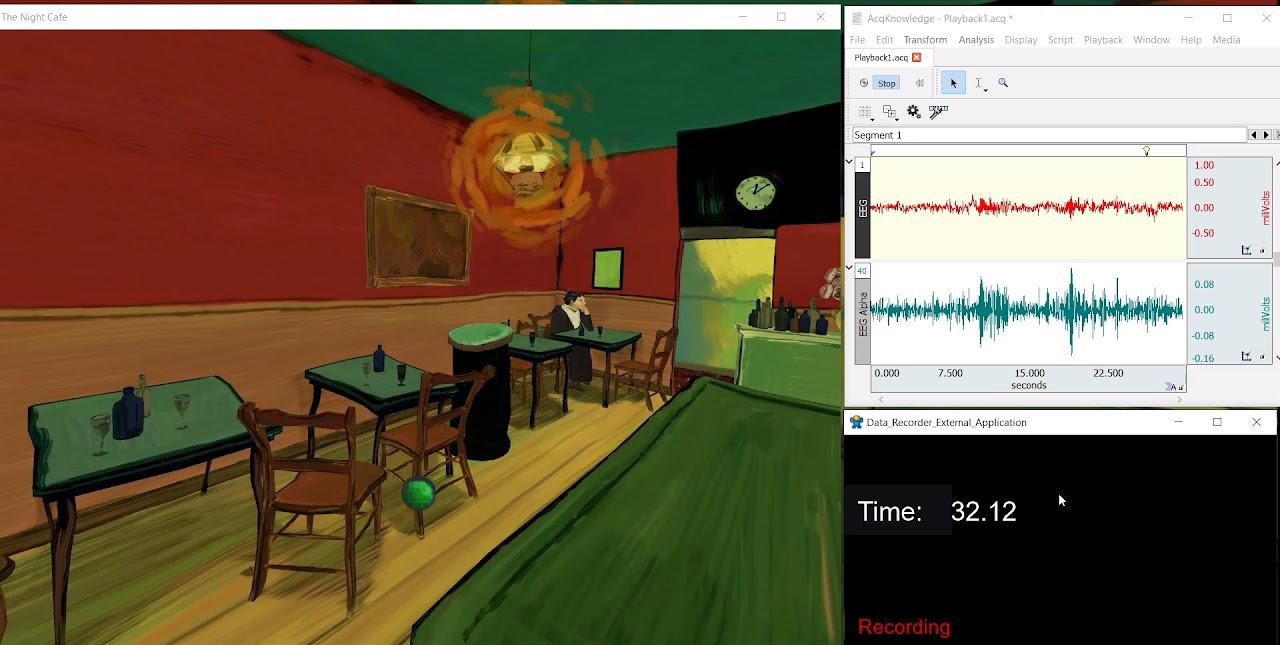

Once connected, SightLab automatically sends markers and events to AcqKnowledge, logging when a participant fixates on an object, grabs something, or reaches a trial milestone. These event markers appear on the AcqKnowledge timeline, aligned in time with the recorded physiological traces.

Researchers can also send custom events—such as emotional triggers, task completions, or condition changes—directly into the BIOPAC graph for later analysis.
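Conceptually, a custom event is a label plus a timestamp on a clock shared with the physiological recording. The sketch below illustrates that bookkeeping in plain Python. `EventLog` and its methods are hypothetical names used only for illustration; they are not part of the SightLab or AcqKnowledge API, which handle the actual marker transfer.

```python
import time


class EventLog:
    """Hypothetical helper that timestamps custom events relative to session start.

    Illustration only: not part of the SightLab or AcqKnowledge API.
    """

    def __init__(self, clock=time.monotonic):
        self._clock = clock        # injectable clock, so the log can be tested
        self._start = clock()      # session start time on that clock
        self.markers = []          # list of (seconds_since_start, label)

    def mark(self, label):
        """Record a labeled event and return its offset from session start."""
        t = self._clock() - self._start
        self.markers.append((t, label))
        return t

    def between(self, t0, t1):
        """Return labels of events whose offsets fall in [t0, t1)."""
        return [label for t, label in self.markers if t0 <= t < t1]


# Fake clock readings: session starts at 100.0 s, then three events occur
ticks = iter([100.0, 101.5, 104.0, 107.25])
log = EventLog(clock=lambda: next(ticks))
log.mark("emotional_trigger")   # 1.5 s after start
log.mark("task_complete")       # 4.0 s after start
log.mark("condition_B")         # 7.25 s after start
print(log.between(0.0, 5.0))    # ['emotional_trigger', 'task_complete']
```

Injecting the clock keeps the sketch deterministic; in a live session the default monotonic clock would be used.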

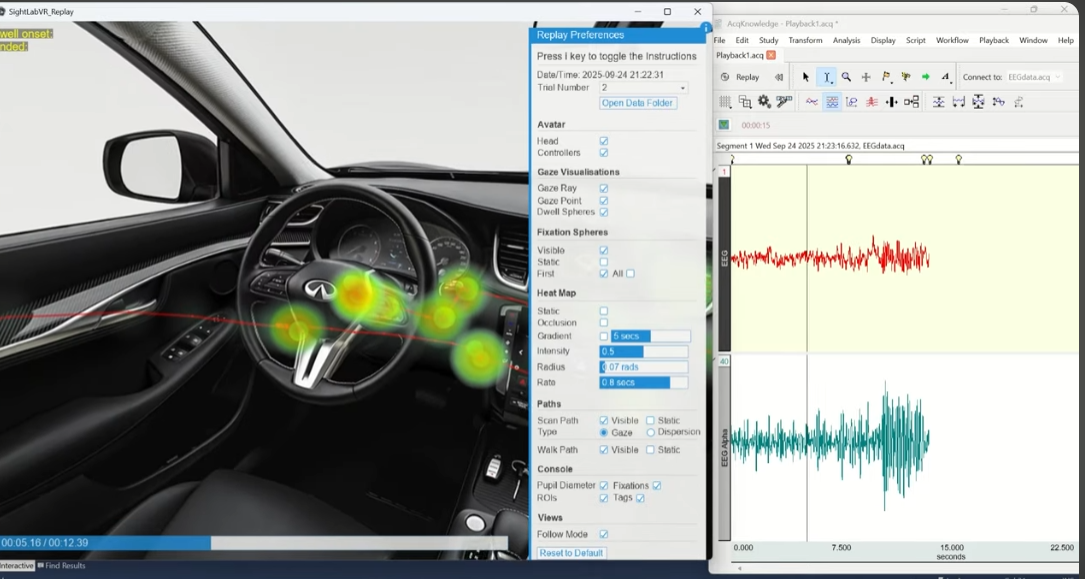

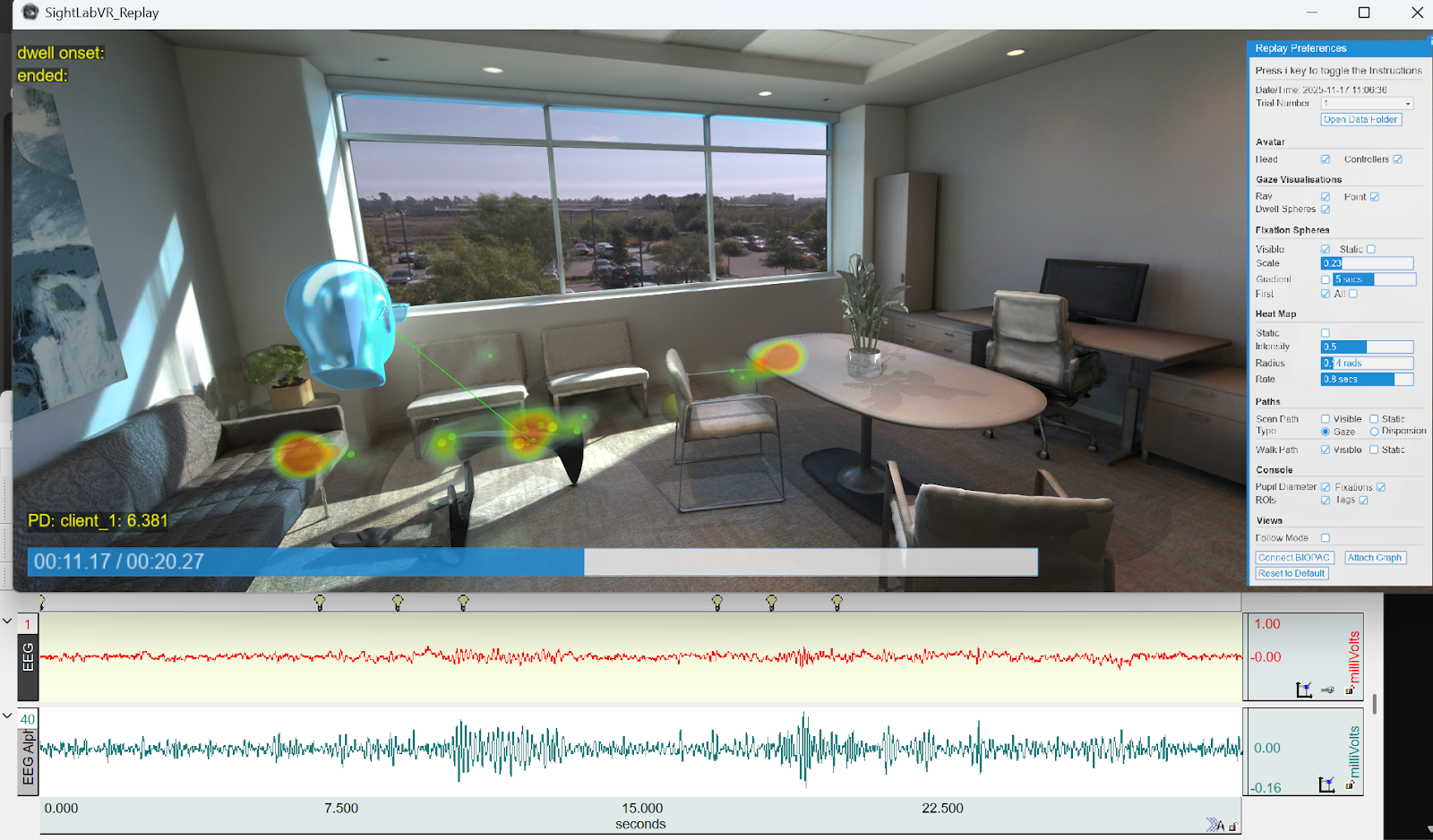

Every recorded session can be replayed in AcqKnowledge alongside SightLab’s interactive replay or a video of the VR session. Because the session replay viewer can now connect directly to AcqKnowledge, you can scrub through time, click an event, and see exactly what the participant saw and how their physiological state changed at that moment. This makes the replay both an analytical tool and a way to present findings in studies of stress, learning, attention, and affective response.

With the latest SightLab release, this synchronization is even tighter: the SightLab Replay Scrubber can directly control AcqKnowledge’s playback graph, so visualizations such as heatmaps, scan paths, and dwell spheres can be viewed in sync with the recorded physiological data.
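Keeping a replay position and a physiological graph in sync comes down to converting a time into a sample index. Below is a minimal sketch, assuming a uniformly sampled recording and a known offset between the replay clock and the first physiological sample. `sample_index` is a hypothetical helper, not a SightLab or AcqKnowledge function.

```python
def sample_index(t_replay, sample_rate_hz, n_samples, offset_s=0.0):
    """Map a replay time (s) to the nearest sample index of a recording.

    Assumes uniform sampling at sample_rate_hz and that the first
    physiological sample occurred offset_s seconds into the replay clock.
    The result is clamped to the valid range [0, n_samples - 1].
    """
    if sample_rate_hz <= 0 or n_samples <= 0:
        raise ValueError("sample rate and sample count must be positive")
    # round() picks the nearest sample (exact halves round to even)
    idx = round((t_replay - offset_s) * sample_rate_hz)
    return min(max(idx, 0), n_samples - 1)


# 60 s recorded at 1000 Hz, starting 0.25 s after the replay clock
n = 60 * 1000
print(sample_index(12.75, 1000, n, offset_s=0.25))  # 12500
print(sample_index(90.0, 1000, n))                  # 59999 (past the end, clamped)
print(sample_index(0.1, 1000, n, offset_s=0.25))    # 0 (before the recording, clamped)
```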

SightLab’s multi-user mode allows multiple participants to share the same virtual environment—ideal for studies of social interaction, teamwork, or group dynamics. Each user’s physiological events can be streamed to AcqKnowledge locally or shared on a central BIOPAC server. This enables synchronized multi-participant data collection with unified event tracking and analysis.
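Unified event tracking across participants amounts to merging several time-ordered event streams into one. The sketch below assumes each participant’s events are already sorted and stamped on a shared clock; `unified_timeline` and the `(timestamp, label)` tuples are illustrative assumptions, not SightLab’s export format.

```python
import heapq


def unified_timeline(streams):
    """Merge per-participant event lists into one time-ordered timeline.

    streams: dict mapping participant ID -> list of (timestamp_s, label),
    each list already sorted by timestamp on a shared clock (assumed).
    Returns a list of (timestamp_s, participant_id, label).
    """
    # Tag every event with its participant ID before merging
    tagged = (
        [(t, pid, label) for t, label in events]
        for pid, events in streams.items()
    )
    # heapq.merge lazily interleaves the sorted inputs by timestamp
    return list(heapq.merge(*tagged, key=lambda e: e[0]))


streams = {
    "P1": [(0.5, "grab"), (3.0, "fixate:door")],
    "P2": [(1.2, "fixate:P1_avatar"), (2.4, "speak")],
}
print(unified_timeline(streams))
# [(0.5, 'P1', 'grab'), (1.2, 'P2', 'fixate:P1_avatar'), (2.4, 'P2', 'speak'), (3.0, 'P1', 'fixate:door')]
```

Merging pre-sorted streams avoids re-sorting the whole timeline, which matters little for a few participants but scales well for long sessions.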

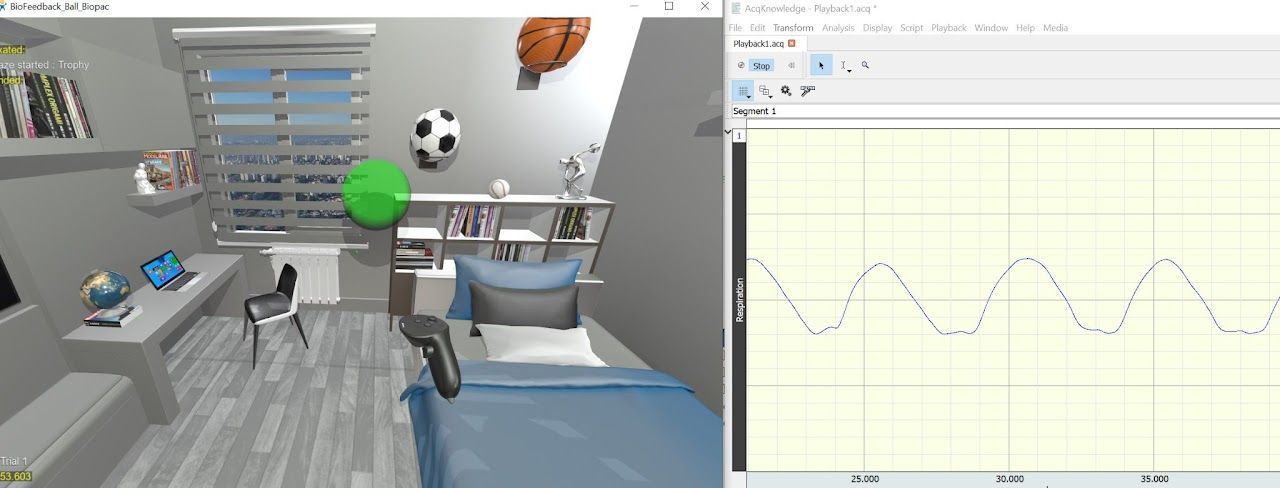

Researchers aren’t limited to passive observation. SightLab’s BIOPAC integration supports biofeedback loops—experiments where real-time physiological data drives the virtual environment itself.
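At its core, a biofeedback loop smooths an incoming physiological signal and maps it onto a parameter of the scene. The sketch below assumes heart rate in beats per minute as input and a 0 to 1 output that could drive something like fog density or lighting. `BiofeedbackMapper`, its thresholds, and the smoothing factor are hypothetical choices, not SightLab’s biofeedback implementation.

```python
class BiofeedbackMapper:
    """Map a noisy physiological signal to a 0..1 environment parameter.

    Hypothetical sketch: input could be heart rate (bpm); output could drive
    fog density or ambient light. Not a SightLab API.
    """

    def __init__(self, low, high, alpha=0.2):
        if high <= low:
            raise ValueError("high must be greater than low")
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.low, self.high, self.alpha = low, high, alpha
        self.smoothed = None

    def update(self, sample):
        # Exponential moving average damps beat-to-beat jitter
        if self.smoothed is None:
            self.smoothed = sample
        else:
            self.smoothed += self.alpha * (sample - self.smoothed)
        # Linear map from [low, high] to [0, 1], clamped at both ends
        level = (self.smoothed - self.low) / (self.high - self.low)
        return min(max(level, 0.0), 1.0)


mapper = BiofeedbackMapper(low=60, high=100, alpha=0.5)
for bpm in (60, 100, 120, 40):
    print(bpm, mapper.update(bpm))
# 60 0.0
# 100 0.5
# 120 1.0
# 40 0.25
```

An exponential moving average is used here because it needs only the previous value, which suits per-frame updates; a windowed average or a band-pass filter would be reasonable alternatives.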

Examples from the SightLab Example Scripts include:

By pairing these capabilities with BIOPAC’s range of sensors, researchers can study emotion, cognition, attention, and performance in ways that were once only possible in controlled lab environments.

SightLab’s data export capabilities let users easily merge these physiological streams with gaze, fixation, and behavioral data for integrated analysis.
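One common way to merge the streams is to attach, to each fixation onset, the physiological sample closest in time. The sketch below uses only the standard library. The fixation dictionary keys (`object`, `start`) and the sample values are assumptions for illustration, not SightLab’s actual export columns or real data.

```python
from bisect import bisect_left


def nearest_sample(times, values, t):
    """Return the value whose timestamp is closest to t.

    times must be sorted ascending; ties go to the earlier sample.
    """
    if not times:
        raise ValueError("empty physiological stream")
    i = bisect_left(times, t)
    if i == 0:
        return values[0]
    if i == len(times):
        return values[-1]
    # t lies between times[i - 1] and times[i]; pick the closer one
    if times[i] - t < t - times[i - 1]:
        return values[i]
    return values[i - 1]


def annotate_fixations(fixations, phys_times, phys_values):
    """Attach the nearest physiological value to each fixation onset."""
    return [
        {**fx, "phys": nearest_sample(phys_times, phys_values, fx["start"])}
        for fx in fixations
    ]


# Hypothetical EDA samples (microsiemens) every 0.5 s and two fixations
phys_times = [0.0, 0.5, 1.0, 1.5, 2.0]
eda = [2.0, 2.1, 2.6, 3.0, 2.8]
fixations = [{"object": "spider", "start": 0.9}, {"object": "door", "start": 1.8}]
print(annotate_fixations(fixations, phys_times, eda))
# [{'object': 'spider', 'start': 0.9, 'phys': 2.6}, {'object': 'door', 'start': 1.8, 'phys': 2.8}]
```

For larger tables, pandas `merge_asof` with `direction="nearest"` performs the same nearest-timestamp join on sorted DataFrames.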

The BIOPAC + SightLab integration is already empowering research across a wide range of fields:

With support for Lab Streaming Layer (LSL), external application data recording, and AI-powered analytics, SightLab continues to push boundaries in human data science. The integration with BIOPAC extends these capabilities to the physiological domain—making it possible to explore not just what people do in virtual worlds, but how their bodies and brains respond in the moment.

As virtual reality research evolves, the synergy between SightLab, Vizard, and BIOPAC ensures one thing: a deeper, richer, and more measurable understanding of human experience.

Learn More

🔗 WorldViz SightLab Documentation

🔗 BIOPAC Systems

To learn how BIOPAC and SightLab can support your research, or for any questions about setting up VR experiments and labs, contact sales@worldviz.com.

To get a demo of SightLab, click here.