Watch this YouTube video demonstrating the External Application Data Recorder:

https://www.youtube.com/watch?v=j63jZA9kPZI

The External Application Data Recorder lets you record, save, and synchronize eye-tracking and sensor data while running external VR applications, including SteamVR games, Oculus apps, web-based VR experiences, and standalone/Android-based applications (note: standalone applications do not include eye tracking). After a session, you can run the replay and view the gaze point synchronized with the data. An experimental mode uses AI to look for view counts.

Install prerequisites

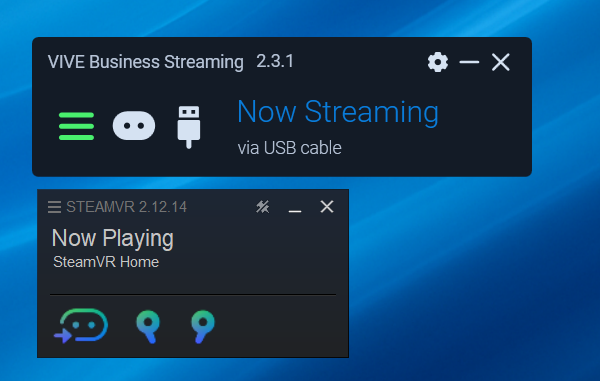

1. Set Up Hardware and Launch External Application

Make sure your hardware and headset are connected and running, then launch the external application you want to record data from (e.g., Unreal, Unity, SteamVR).

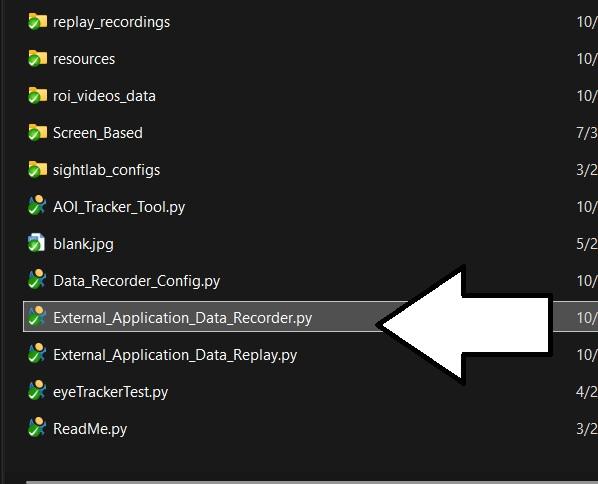

2. Launch the Recorder

Launch the External_Application_Data_Recorder.py file to get the recorder ready.

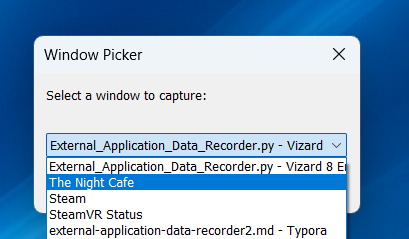

3. Choose Window Title

Choose the Window Title to capture.

4. Select Recording Length

Select recording length (seconds). You can end early by closing the window or pressing Space.

5. Choose Your Hardware

Select the hardware profile you’re using.

6. Start Recording

Put on the headset and press Spacebar (default; configurable). If Biopac is connected, the AcqKnowledge transport starts. On supported HMDs, you’ll see a live gaze point on the mirrored window.

7. Finish & Save

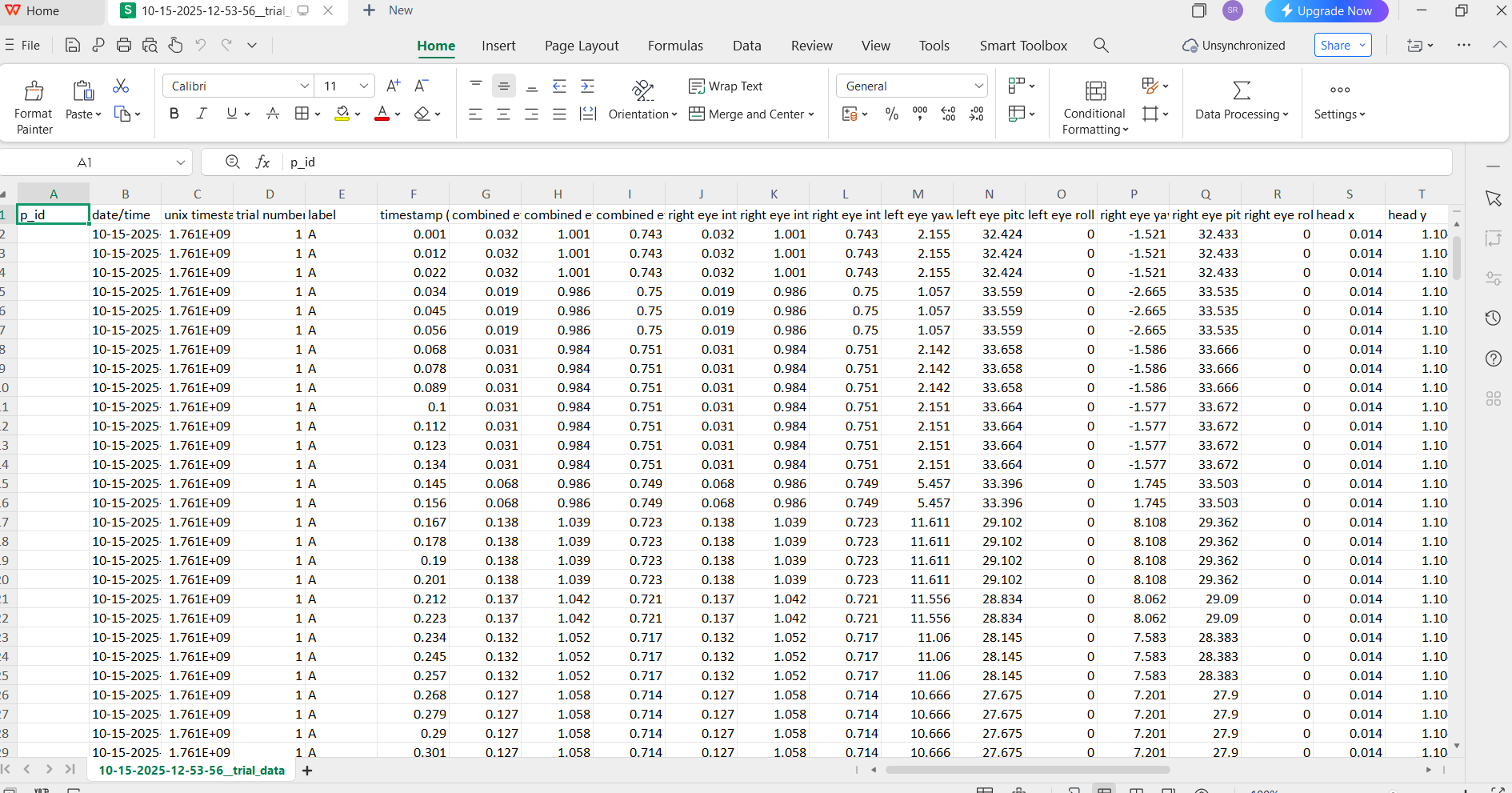

When the timer ends you’ll hear a beep. Data goes to the /data folder.

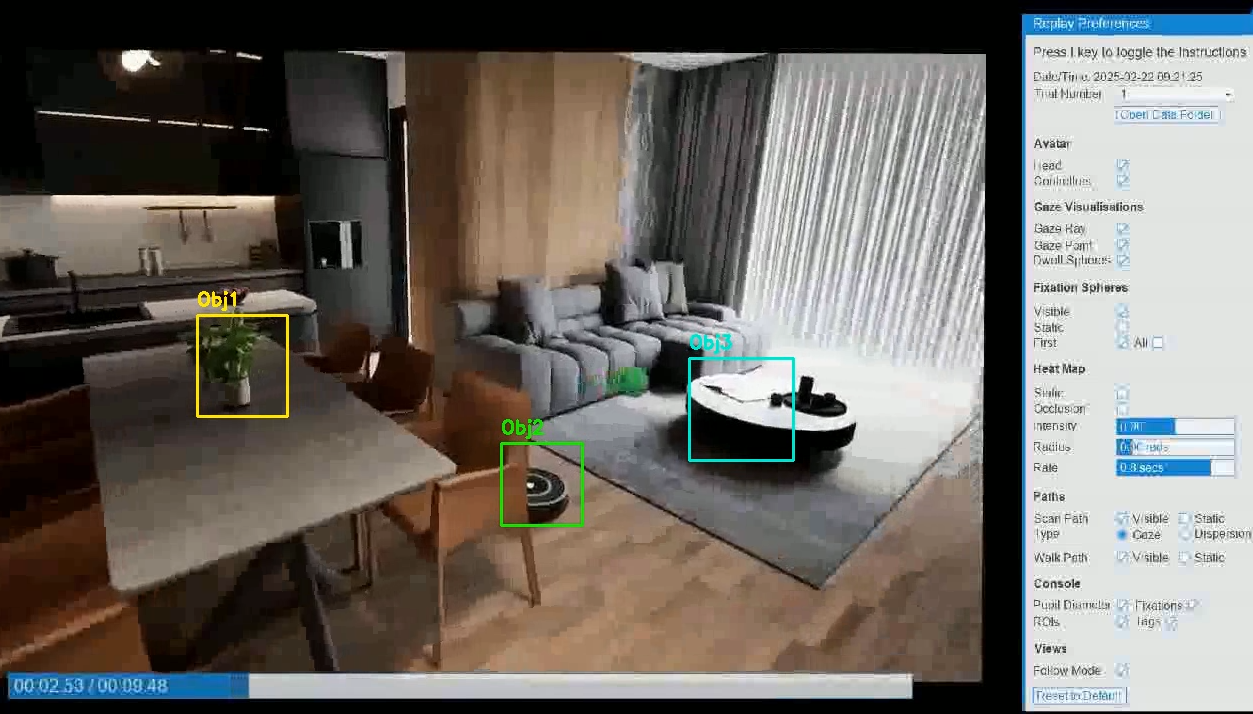

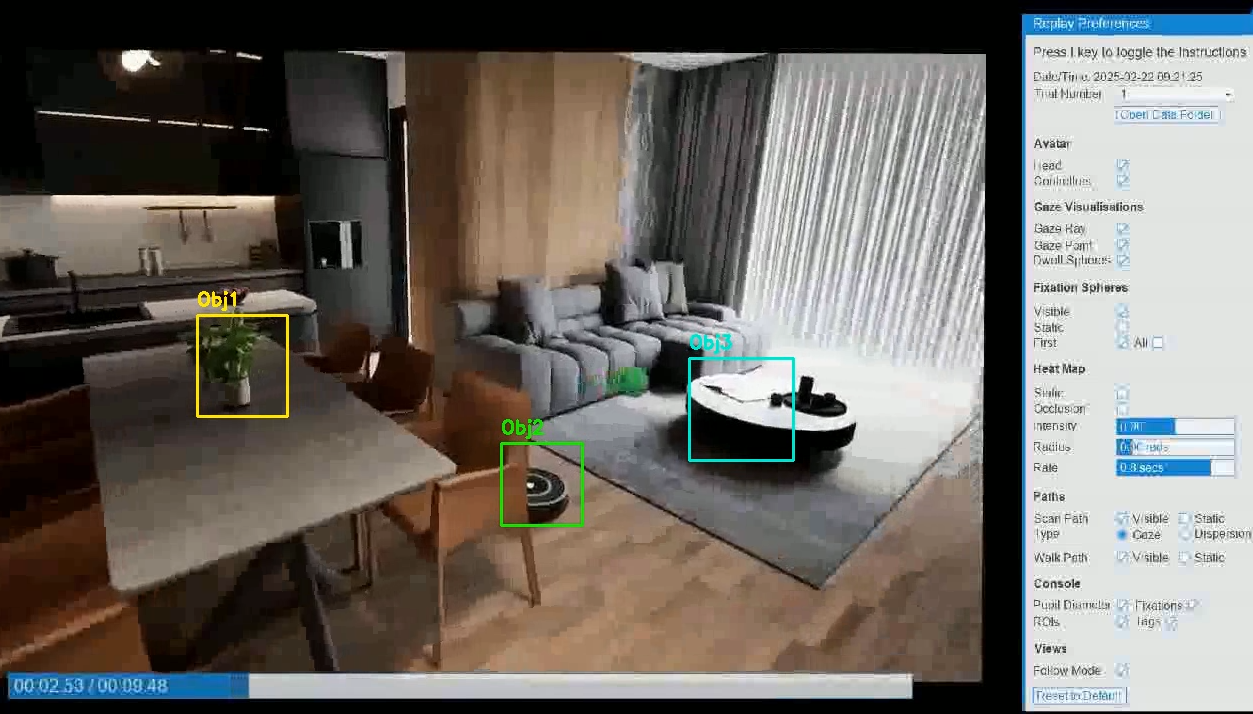

8. Replay Your Session

To view a replay with visualizations, launch the External_Application_Data_Replay.py file and choose your session. (If the replay crashes, you may need to run convert_video_to_fix.py to convert the video.)

9. Scrub Through Playback

Use the scrubber in the replay to move through the playback. If you are using AcqKnowledge 6.02 or higher, press the 1 and 2 keys to synchronize the scrubber position in AcqKnowledge. (See the Replay page for more information and controls.)

10. Toggle Visualizations

Toggle visualizations such as scan path, fixation spheres, and the heatmap in the replay. (You may need to toggle items first to see the visualizations. Visualizations other than gaze point are not available for stand-alone Android-based applications.)

11. Export Gaze-Overlay Video

Press 4 in the replay to record a video with the gaze point (plays through in real time). Press 5 to stop. This saves in the replay_recordings folder. Open the recorded video in AcqKnowledge to see synchronization with physiological data. (See sending-events-to-biopac.md for details.)

12. (Optional) Experimental AOI Tracker

Run the Experimental AOI Tracker Tool to tag objects or regions to follow (see below).

See here for a full list.

Eye/Gaze

Physiology (when available)

Events & Timing

Video & Sync

Note: Standalone/Android via casting records head pose only; no eye vector or object intersections.

```python
# ----- Biopac & Network Sync -----
BIOPAC_ON = True  # Communicate with Biopac AcqKnowledge
LOCK_TRANSPORT = True  # Lock the transport
NETWORK_SYNC_KEY = 't'  # Key to send event marker to AcqKnowledge
NETWORK_SYNC_EVENT = 'triggerPress'  # Event trigger for marker
USE_NETWORK_EVENT = False  # Send network event to external app
NETWORK_START = False  # If True, use a network event to start (instead of START_END_SESSION_KEY)

# Network host/port and JSON event names (must match Unity/Unreal sender)
NETWORK_HOST = 'localhost'
NETWORK_PORT = 4950
NETWORK_START_EVENT_NAME = 'start_trial'

# ----- Data Recording -----
RECORD_VIDEO = True  # Enable video recording
RECORD_VIDEO_OF_PLAYBACK = True  # Record during replay
RECORD_GAZE_MAT = True  # Save gaze matrix data
RECORD_FACE_TRACKER_DATA = False  # Save facial expression data
OLDER_SIGHTLAB_PID = False  # Use older participant ID format

# ----- Video Recording Method -----
# "OPENCV" = Compressed videos (~50MB/min), may crash after ~10min
# "VIZARD_BUILT_IN" = Uncompressed (~400MB/min), stable for hours, records Vizard window only
# "SIGHTLAB_BUILT_IN" = Vizard built in with compression - Can take some time to compress
# "IMAGEIO_FFMPEG" = External window capture via imageio-ffmpeg, may crash after ~10min
SCREEN_RECORDER_TYPE = "IMAGEIO_FFMPEG"

# ----- Video Quality Settings -----
VIDEO_RECORDING_WINDOW_HEIGHT_NEW = '1920'
VIDEO_RECORDING_WINDOW_WIDTH_NEW = '1080'

# ----- Timer & Session Control -----
USE_TIMER = True  # Use timer instead of keypress to end trial
USE_TIMER_DROPDOWN = True  # Show dropdown to select timer length
DEFAULT_TIMER_LENGTH = 10  # Default timer length (seconds)
START_END_SESSION_KEY = ' '  # Spacebar to start/stop trial
PLAY_END_SOUND = True  # Play sound at end of trial
TRIAL_CONDITION = 'A'  # Default trial condition label
SET_NUMBER_OF_TRIALS = 1

# ============================================================================
# REAL-TIME STREAMING SETTINGS (During Recording)
# ============================================================================
REAL_TIME_STREAMING = True  # Show live window capture in VR during recording

# ----- Texture Alignment (if video appears offset) -----
# Use keyboard controls to adjust, then copy values here:
# Arrow Keys: Move texture | Numpad 4/6/8/2: Scale texture
# P: Print values | R: Reset to defaults
TEXTURE_OFFSET_X = 0.0  # Horizontal offset
TEXTURE_OFFSET_Y = 0.0  # Vertical offset
TEXTURE_SCALE_X = 1.0  # Horizontal scale
TEXTURE_SCALE_Y = 1.0  # Vertical scale
TEXTURE_ADJUSTMENT_STEP = 0.05  # Adjustment increment per keypress

# ============================================================================
# REPLAY SETTINGS (During Playback)
# ============================================================================
HIDE_REPLAY_GUI = False  # Hide SightLab's replay GUI
FOLLOW_ON = True  # Enable first-person view in replay
REPLAY_SECOND_GAZE_POINT_OFF = True  # Default True
override_screen_position = True  # Flag to control whether we override replay position
override_real_time_position = False  # Default False

# ----- Screen Position & Size -----
# Adjust these if the replay video appears misaligned or wrong size
# Use keyboard controls during replay to find perfect values:
# Arrow Keys: Move | PgUp/PgDn: Depth | +/-: Width | [/]: Height
# K: Print values | L: Reset | O: Toggle override

# ----- Viewpoint Adjustment -----
# Fine-tune camera position/rotation for optimal replay viewing

# ============================================================================
# PROFILE MAPPING AND PRESET CONFIGURATIONS
# ============================================================================
```

See the config file for the full list of profile mappings you can adjust.

When to switch recorder type

- VIZARD_BUILT_IN: rock-solid for long runs (uncompressed, big files).
- IMAGEIO_FFMPEG/OPENCV: smaller files, may be less stable after ~10 min.
- SIGHTLAB_BUILT_IN: Vizard built-in with compression.
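As a rough guide to this trade-off, the sketch below estimates file size from the approximate rates quoted in the config comments (~50 MB/min compressed, ~400 MB/min uncompressed) and suggests a recorder type. The helper names, the use of 10 minutes as a hard cutoff, and applying the ~50 MB/min figure to IMAGEIO_FFMPEG are assumptions made for illustration; they are not part of SightLab.

```python
# Approximate rates from the config comments; real sizes depend on
# resolution and scene content.
APPROX_MB_PER_MIN = {
    "OPENCV": 50,            # compressed
    "IMAGEIO_FFMPEG": 50,    # assumption: similar to OPENCV (no figure is documented)
    "VIZARD_BUILT_IN": 400,  # uncompressed
}


def estimate_size_mb(recorder_type, minutes):
    """Return a rough file size in MB for a recording of the given length."""
    return APPROX_MB_PER_MIN[recorder_type] * minutes


def suggest_recorder(minutes, long_run_minutes=10):
    """Hypothetical rule of thumb: prefer stability once a session is long."""
    if minutes > long_run_minutes:
        return "VIZARD_BUILT_IN"
    return "IMAGEIO_FFMPEG"


if __name__ == "__main__":
    for minutes in (5, 45):
        recorder = suggest_recorder(minutes)
        size = estimate_size_mb(recorder, minutes)
        print(f"{minutes} min -> {recorder}, roughly {size} MB")
```

The suggested value would then be copied into SCREEN_RECORDER_TYPE in the config by hand.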

Requires an OpenAI API key.

Keys & Tokens

Set API keys globally (Windows cmd):

setx OPENAI_API_KEY "your-key"

Restart Vizard after setting. AI image analysis can be token‑heavy; consider sampling every N frames and using a dwell threshold (e.g., ≥15 frames at 30 fps ≈ 0.5 s).
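The dwell threshold and frame sampling mentioned above can be sketched as follows. This is a minimal illustration only: the function names and the per-frame label list are hypothetical and do not reflect how the AOI tracker is implemented. It assumes you already have one label per frame (or None when nothing is being looked at).

```python
def dwell_threshold_frames(min_dwell_seconds, fps):
    """Convert a dwell time in seconds to a number of consecutive frames."""
    return max(1, round(min_dwell_seconds * fps))


def count_dwells(labels, min_frames):
    """Count runs of the same non-None label that last at least min_frames."""
    counts = {}
    current, run = None, 0
    for label in list(labels) + [None]:  # trailing None flushes the final run
        if label == current:
            run += 1
            continue
        if current is not None and run >= min_frames:
            counts[current] = counts.get(current, 0) + 1
        current, run = label, 1
    return counts


def sample_every(frames, n):
    """Keep every n-th frame to reduce the number of images sent for analysis."""
    return frames[::n]


if __name__ == "__main__":
    threshold = dwell_threshold_frames(0.5, 30)  # 0.5 s at 30 fps
    labels = ["poster"] * 20 + [None] * 5 + ["door"] * 8 + ["poster"] * 15
    print(threshold, count_dwells(labels, threshold))
```

If you sample every N frames before labeling, divide the frame threshold by N as well, since each sampled frame then stands in for N original frames.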

Where are my files?

All sessions go under /data/<date>_<participant>/
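To locate the most recent session from a script, something like the following could work. It is a sketch that only assumes each session is a subfolder of the data directory, as described above; the function names are hypothetical, and it sorts by modification time because the exact date format in the folder name is not specified here.

```python
from pathlib import Path


def list_sessions(data_dir="data"):
    """Return session folders under data_dir, newest first (by modification time)."""
    root = Path(data_dir)
    if not root.is_dir():
        return []
    folders = [p for p in root.iterdir() if p.is_dir()]
    return sorted(folders, key=lambda p: p.stat().st_mtime, reverse=True)


def latest_session(data_dir="data"):
    """Return the most recently modified session folder, or None if there is none."""
    sessions = list_sessions(data_dir)
    return sessions[0] if sessions else None


if __name__ == "__main__":
    newest = latest_session("data")
    print(newest if newest else "No sessions found")
```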

Why does the replay look stretched/offset?

Use the replay hotkeys (arrow keys, PgUp/PgDn, +/-) to dial in the screen, then copy the printed values into the config.

Can I start/stop with the network?

Yes. Set NETWORK_START = True and match the event names, host, and port with your Unity/Unreal sender.
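For testing without Unity or Unreal, a small Python sender along these lines may help. The host, port, and event name come from the config defaults, but the transport (UDP here) and the JSON field name "event" are assumptions made for this sketch; check the sender example that ships with SightLab for the actual message format before relying on it.

```python
import json
import socket

# Defaults copied from the recorder config.
NETWORK_HOST = "localhost"
NETWORK_PORT = 4950
NETWORK_START_EVENT_NAME = "start_trial"


def build_event(name):
    """Encode an event as JSON bytes. The {"event": ...} shape is an assumption."""
    return json.dumps({"event": name}).encode("utf-8")


def send_event(name, host=NETWORK_HOST, port=NETWORK_PORT):
    """Send one event datagram over UDP (assumed transport); returns bytes sent."""
    payload = build_event(name)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(payload, (host, port))
```

Calling send_event(NETWORK_START_EVENT_NAME) while the recorder is waiting would then stand in for the Unity/Unreal sender, provided the assumed format matches what the recorder expects.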

For more information about how you can use WorldViz tools for VR research, contact sales@worldviz.com.

For a demo of SightLab and Vizard click here.